The Triple Fit Framework for AI Product Strategy

In just 4 months, I watched 6 promising products fail — same root cause, every time.

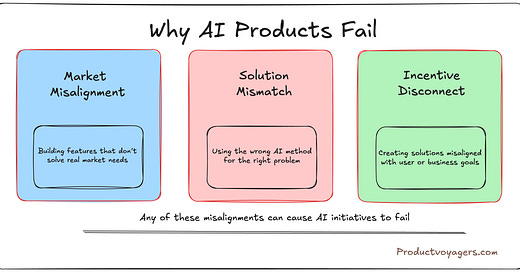

The most common reason AI initiatives fail isn't technical—it's strategic.

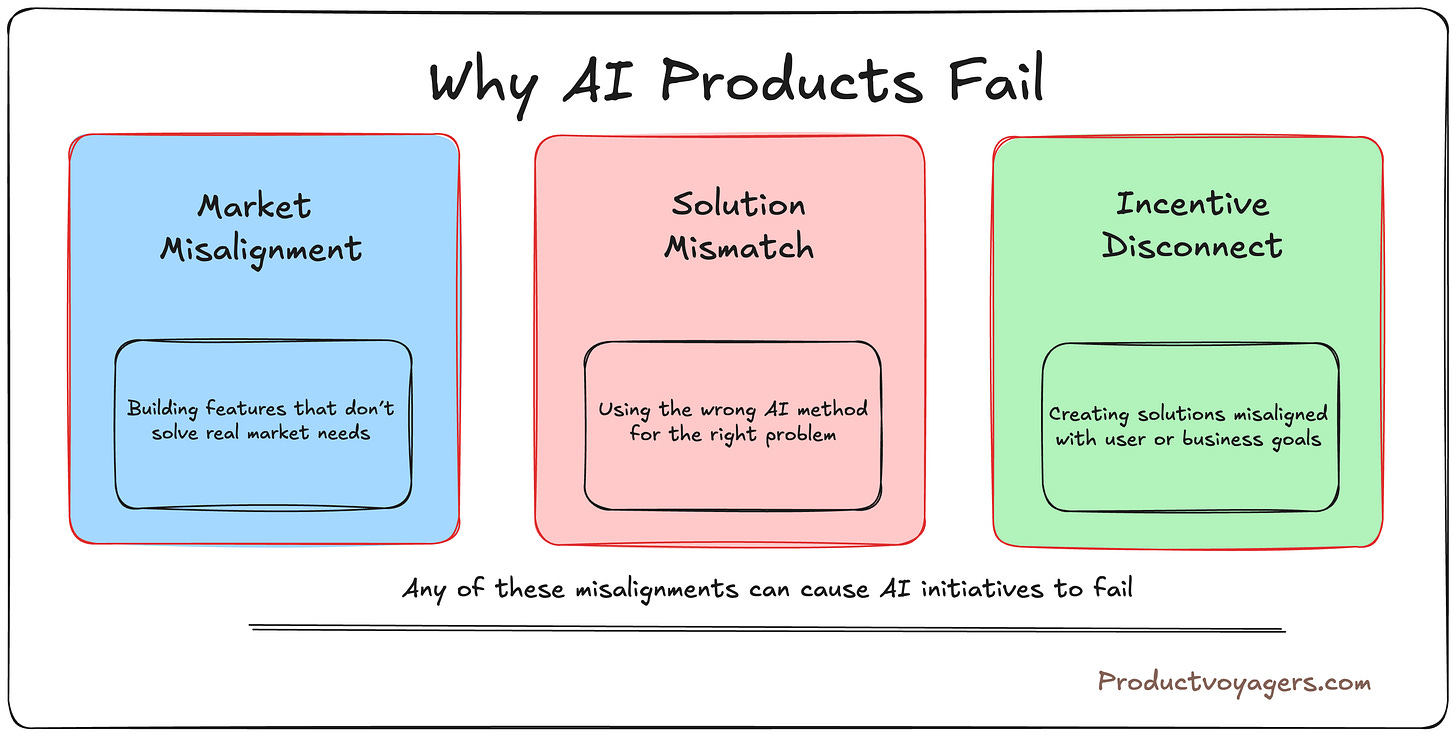

After observing multiple AI products from concept to market, I've found that successful AI products require alignment across three critical dimensions: market fit, solution fit, and incentive fit. I call this the Triple Fit Framework.

These failures manifest differently across organizational contexts, but the patterns remain consistent.

In financial services, market misalignment often appears as over-engineering solutions for problems users don't prioritize.

In healthcare, solution mismatch frequently emerges when technical approaches don't account for clinical workflow realities.

In manufacturing, incentive disconnects commonly arise when operational staff perceive AI as threatening rather than enhancing their expertise.

By systematically addressing all three dimensions, we can catch these misalignments early and significantly improve the odds that an AI initiative succeeds.

Triple Fit Framework

1. Market Fit: Finding the Right Problem

Market fit answers the fundamental question: "Are we solving a problem worth solving?"

Key Assessment Dimensions:

🔍 Problem Significance

Key Questions: How painful is this problem for users? How frequently do they encounter it? Who is your ICP (Ideal Customer Profile)?

Assessment Methods: User interviews, workflow analysis, support ticket analysis

🌱 Market Readiness

Key Questions: Are users actively seeking solutions? Are they willing to change behavior?

Assessment Methods: Competitor adoption rates, market research, technology acceptance metrics

🏆 Competitive Landscape

Key Questions: What existing solutions address this need? What are their limitations?

Assessment Methods: Competitive analysis, feature comparison, user satisfaction research

📜 Regulatory Environment

Key Questions: What compliance requirements impact solutions in this space?

Assessment Methods: Regulatory analysis, legal consultation, compliance assessment

⚠️ Red Flags for Poor Market Fit:

Users express interest but not urgency ("That would be nice to have")

Problem requires extensive education to recognize

Existing solutions are "good enough" for most users

Regulatory barriers create excessive friction for adoption

2. Solution Fit: Choosing the Right Approach

Solution fit answers the question: "Is AI the right approach, and if so, which specific AI capabilities are appropriate?"

Key Assessment Dimensions:

⚙️ Technical Feasibility

Key Questions: Can current AI capabilities reliably address this problem? What accuracy levels are achievable?

Assessment Methods: Technical prototyping, benchmark testing, data quality assessment

📊 Data Availability

Key Questions: Do we have access to the data needed to train effective models? Can we collect it?

Assessment Methods: Data inventory, collection feasibility analysis, privacy impact assessment

🔄 Alternative Approaches

Key Questions: Could simpler non-AI approaches solve this problem effectively?

Assessment Methods: Comparative solution analysis, cost-benefit assessment

👥 Augmentation vs. Automation

Key Questions: Should AI completely automate the task or augment human capabilities?

Assessment Methods: Task decomposition, error impact analysis, user skill assessment

⚠️ Red Flags for Poor Solution Fit:

Required accuracy levels exceed current technical capabilities

Insufficient training data for the specific domain

Simpler approaches would deliver similar results

Solution requires complete automation where human oversight is essential
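Once prototyping and benchmark testing produce numbers, the red flags above can be turned into a quick checklist. The sketch below is illustrative only. The `SolutionEvidence` fields, the 0.05 minimum lift over a non-AI baseline, and the sample figures are hypothetical assumptions, not thresholds defined by the framework.

```python
from dataclasses import dataclass


@dataclass
class SolutionEvidence:
    # All fields are hypothetical inputs gathered during prototyping.
    required_accuracy: float   # accuracy the workflow needs to be useful
    model_accuracy: float      # accuracy the AI prototype reached on a benchmark
    baseline_accuracy: float   # accuracy of the simplest non-AI approach (e.g. rules)
    labeled_examples: int      # domain-specific training examples available
    min_examples: int          # team's estimate of the minimum the task needs
    oversight_essential: bool  # would an unchecked error be unacceptable?
    fully_automated: bool      # does the design remove the human from the loop?


def solution_fit_red_flags(e: SolutionEvidence, min_lift: float = 0.05) -> list[str]:
    """Return the solution-fit red flags that the evidence triggers."""
    flags = []
    if e.model_accuracy < e.required_accuracy:
        flags.append("required accuracy exceeds current capability")
    if e.labeled_examples < e.min_examples:
        flags.append("insufficient training data for the domain")
    if e.model_accuracy - e.baseline_accuracy < min_lift:
        flags.append("simpler approach delivers similar results")
    if e.fully_automated and e.oversight_essential:
        flags.append("full automation where human oversight is essential")
    return flags


# Invented figures for illustration.
evidence = SolutionEvidence(
    required_accuracy=0.95, model_accuracy=0.91, baseline_accuracy=0.88,
    labeled_examples=2_000, min_examples=5_000,
    oversight_essential=True, fully_automated=False,
)
for flag in solution_fit_red_flags(evidence):
    print(flag)
```

With these numbers, three of the four flags fire. The model misses the required accuracy, the dataset is too small, and it beats a rules baseline by only three points. The design keeps a human in the loop, so the automation flag stays quiet.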

3. Incentive Fit: Aligning Stakeholder Motivations

Incentive fit answers the question: "Why would users, organizations, and other stakeholders adopt and sustain this solution?"

Key Assessment Dimensions:

👤 User Incentives

Key Questions: How does this solution benefit individual users? Does it threaten their status or expertise?

Assessment Methods: User motivation research, usage pattern analysis, adoption forecasting

🏢 Organizational Incentives

Key Questions: How does this align with business objectives? What metrics will demonstrate value?

Assessment Methods: Business case analysis, ROI modeling, value proposition validation

🌐 Ecosystem Incentives

Key Questions: How does this impact partners, suppliers, and other stakeholders?

Assessment Methods: Ecosystem mapping, partner impact assessment, value chain analysis

🚀 Implementation Incentives

Key Questions: Who needs to support implementation? What motivates them?

Assessment Methods: Stakeholder mapping, change readiness assessment, influence analysis

⚠️ Red Flags for Poor Incentive Fit:

Solution threatens user status or expertise without clear benefits

ROI requires long-term measurement but organization needs short-term wins

Key stakeholders bear implementation costs but don't share in benefits

Success metrics don't align with organizational priorities
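The red flag about long-term ROI versus short-term wins comes down to simple arithmetic: when does the initiative pay for itself, and is that inside the window the organization will wait? This is a minimal sketch that assumes a straight-line payback model with no discounting or adoption ramp-up. `payback_months`, `roi_horizon_mismatch`, and all figures are hypothetical.

```python
import math


def payback_months(upfront_cost: float, monthly_benefit: float, monthly_run_cost: float) -> float:
    """Months until cumulative net benefit covers the upfront cost (inf if never)."""
    net = monthly_benefit - monthly_run_cost
    if net <= 0:
        return math.inf  # the initiative never pays back
    return upfront_cost / net


def roi_horizon_mismatch(payback: float, horizon_months: float) -> bool:
    """True when value arrives later than the organization is willing to wait."""
    return payback > horizon_months


# Hypothetical figures for illustration only.
payback = payback_months(upfront_cost=600_000, monthly_benefit=50_000, monthly_run_cost=10_000)
print(payback)                                           # 15.0
print(roi_horizon_mismatch(payback, horizon_months=12))  # True
```

A 15-month payback against a 12-month patience window is the mismatch described in that red flag. A fuller model would discount cash flows and account for the time it takes adoption to ramp up.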

Applying the Triple Fit Framework in Practice

The Triple Fit Framework provides a practical approach to evaluating and implementing AI initiatives. By systematically assessing market fit, solution fit, and incentive fit, organizations can identify potential challenges early and develop strategies to address them.

The framework is iterative by design—insights gained in one dimension often require revisiting assumptions in others. For example, discovering that user incentives don't align with the proposed solution may necessitate rethinking the market need or modifying the technical approach.

Successful implementations typically involve cross-functional teams with expertise across all three dimensions, rather than relying solely on technical teams to drive AI initiatives.

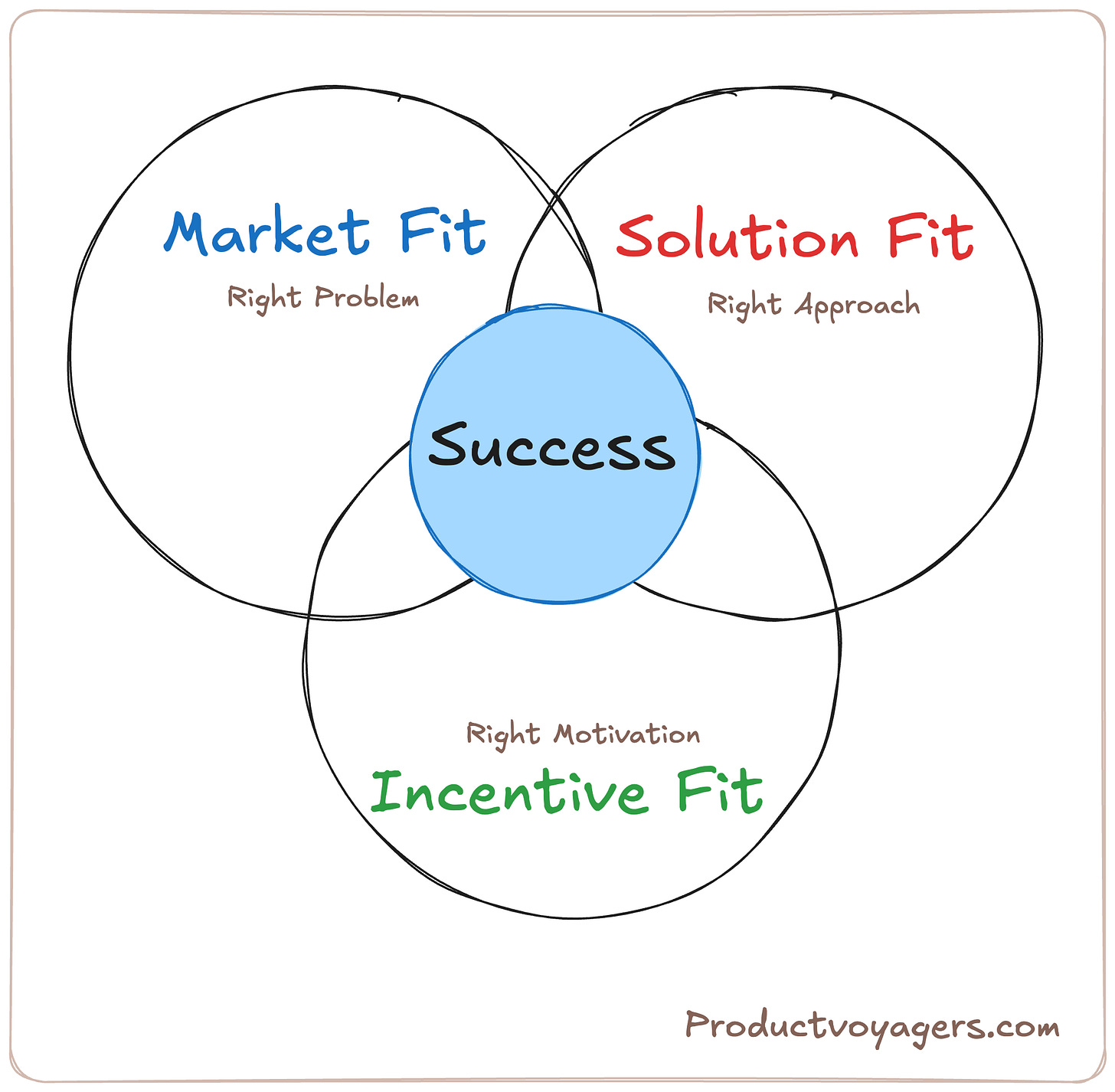

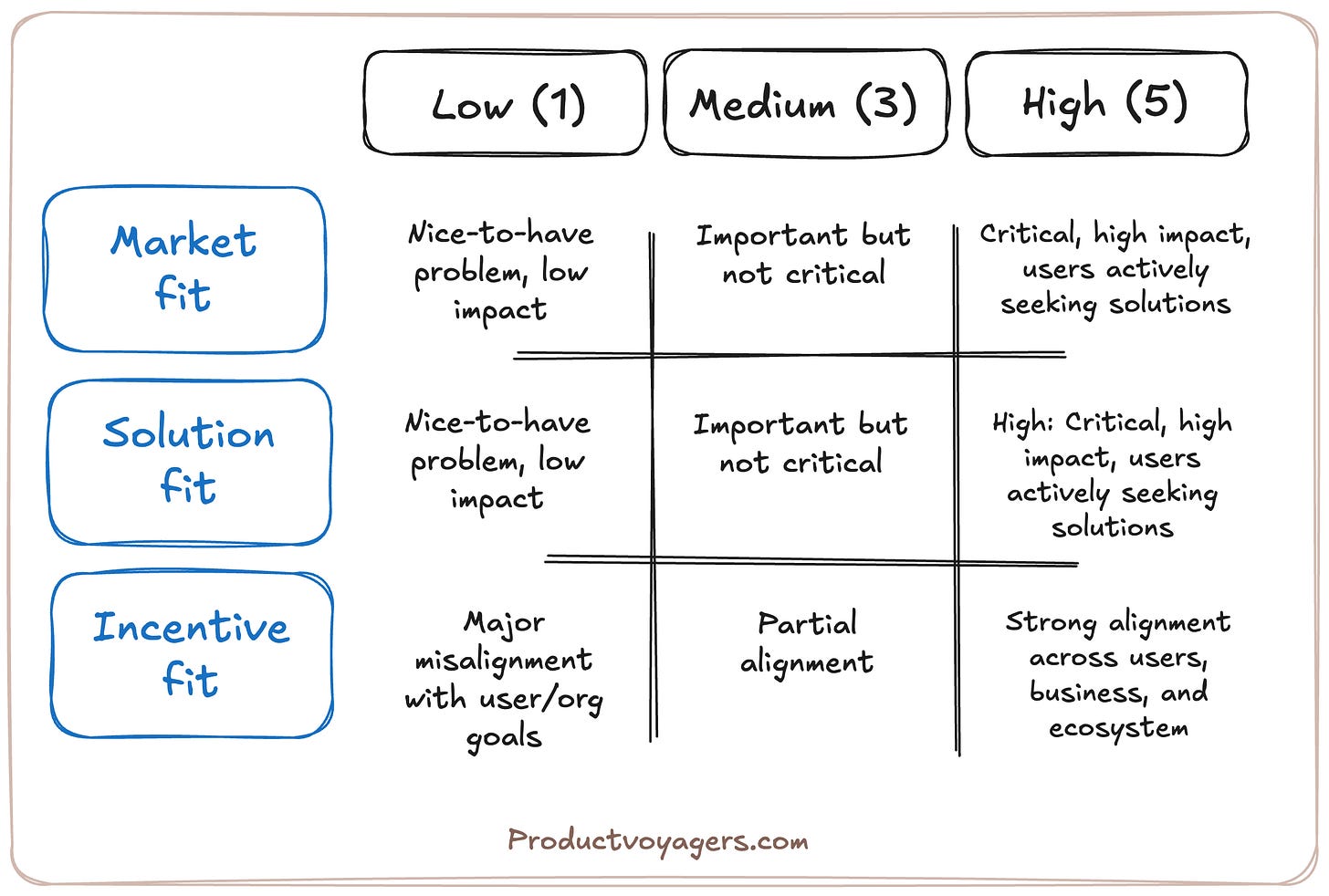

Triple Fit Matrix: A Strategic Tool

To operationalize this framework, I use a Triple Fit Matrix that scores each potential AI initiative on all three dimensions.

A successful AI product strategy requires a minimum score of 3 in each dimension, with at least one dimension scoring 5. This prevents pursuing initiatives that excel in one dimension but fail in others.
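The scoring rule can be expressed as a small function. This sketch assumes a 1 to 5 scale, inferred from the thresholds because the scale isn't spelled out here. The dimension keys `market`, `solution`, and `incentive` are hypothetical names.

```python
def passes_triple_fit(scores: dict[str, int], floor: int = 3, peak: int = 5) -> bool:
    """Every dimension must reach `floor`, and at least one must reach `peak`.

    Assumes scores on a 1-5 scale keyed by 'market', 'solution', 'incentive'.
    """
    dims = ("market", "solution", "incentive")
    missing = [d for d in dims if d not in scores]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    values = [scores[d] for d in dims]
    return min(values) >= floor and max(values) >= peak


print(passes_triple_fit({"market": 5, "solution": 3, "incentive": 4}))  # True
print(passes_triple_fit({"market": 5, "solution": 5, "incentive": 2}))  # False: incentive below floor
print(passes_triple_fit({"market": 4, "solution": 4, "incentive": 4}))  # False: no dimension at 5
```

The second case is the one the rule exists to catch. Two excellent scores cannot compensate for a failing third dimension.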

Triple Fit Framework Across Different Sectors

The Triple Fit Framework can be adapted to various organizational contexts.

Here’s how it plays out in three non-tech sectors, where AI is often harder to justify and success is tougher to measure. Each sector ends with a real example.

Government Sector Applications

Market Fit Considerations:

Public value often supersedes commercial metrics

"Users" include citizens, officials, other agencies, and elected representatives

Problem validation should include public consultation and policy alignment

Success metrics typically emphasize societal impact over efficiency gains

Solution Fit Considerations:

Legacy system integration often presents significant challenges

Data privacy and security standards are typically more stringent

AI transparency and explainability requirements are frequently non-negotiable

Solutions must accommodate diverse user technical capabilities

Incentive Fit Considerations:

Career advancement incentives differ from those in the private sector

Budget allocation processes may disconnect costs from benefits

Cross-agency coordination often creates complex stakeholder dynamics

Public scrutiny creates different risk tolerance profiles

Example: A tax authority implemented a fraud detection AI system that excelled technically but struggled with adoption.

Triple Fit analysis revealed that while the market need was clear, the incentive structure for auditors was misaligned—success metrics focused on fraud detection volume rather than accuracy, creating resistance from staff who had to investigate false positives.

Realigning performance metrics to emphasize precision over volume improved adoption from 35% to 78%.
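To see why a volume-based metric pushes auditors the wrong way, compare two alert thresholds using precision. Precision is the share of flagged cases that turn out to be real fraud. The counts below are invented for illustration and do not come from the tax authority example.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Share of flagged cases that are confirmed fraud (0.0 if nothing is flagged)."""
    flagged = true_positives + false_positives
    return true_positives / flagged if flagged else 0.0


# Two hypothetical alert thresholds for the same fraud model (numbers are invented).
thresholds = {
    "low threshold (high volume)": {"tp": 120, "fp": 480},
    "high threshold (high precision)": {"tp": 90, "fp": 60},
}

for name, c in thresholds.items():
    flagged = c["tp"] + c["fp"]
    p = precision(c["tp"], c["fp"])
    print(f"{name}: flagged={flagged}, confirmed={c['tp']}, "
          f"false positives to investigate={c['fp']}, precision={p:.2f}")
```

If success is measured by confirmed detections, the low threshold wins (120 vs. 90). Auditors pay for that win by investigating 480 false positives instead of 60. Precision (0.20 vs. 0.60) makes that cost visible in the metric itself.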

Banking Sector Applications

Market Fit Considerations:

Regulatory compliance often shapes market needs

Customer experience expectations are increasingly set by tech companies

Risk management considerations frequently outweigh efficiency gains

Legacy processes may obscure genuine user pain points

Solution Fit Considerations:

Explainability requirements are particularly stringent for credit decisions

Data quality varies dramatically across banking systems

Real-time performance requirements often limit algorithm options

Integration with core banking systems creates technical constraints

Incentive Fit Considerations:

Department silos create implementation challenges

Compensation structures may not reward innovation adoption

Risk aversion culture can impede experimentation

Customer retention metrics may not capture value creation

Example: A global bank's AI-powered customer service chatbot achieved only 12% usage despite strong market fit (customer demand for 24/7 service) and solution fit (90% accuracy on common queries).

Triple Fit analysis revealed incentive misalignment: branch managers were evaluated on relationship-based metrics that the chatbot threatened to disrupt.

By creating shared success metrics and positioning the technology as freeing staff for higher-value customer interactions, adoption increased to 67%.

Oil & Gas Sector Applications

Market Fit Considerations:

Safety implications often outweigh efficiency gains

Operational continuity requirements create risk aversion

Field vs. office workforce dynamics affect problem prioritization

Asset lifespans measured in decades impact innovation adoption

Solution Fit Considerations:

Harsh operating environments create unique technical constraints

Sensor data quality and availability vary dramatically across assets

Remote locations may limit connectivity and computing resources

Legacy equipment integration creates implementation challenges

Incentive Fit Considerations:

Operational KPIs may not reward preventative improvements

Safety culture can either accelerate or impede AI adoption

Field workforce expertise is often undervalued in solution design

Corporate/field alignment challenges affect implementation success

Example: An oil and gas major's predictive maintenance AI system for offshore platforms delivered excellent technical performance in testing but faced field resistance.

Triple Fit analysis identified that while the market need was valid (preventing costly downtime) and the solution worked technically, the incentives were misaligned: maintenance crews perceived the AI as threatening their expertise and job security.

By reframing the technology as an "expert assistant" and involving crews in model training and refinement, adoption increased from 23% to 81%.

Leadership Principles for Triple Fit Success

Effective application of the Triple Fit Framework requires intentional leadership approaches that balance technical expertise with strategic insight. Across successful implementations in multiple sectors, five core principles emerge:

🎯 Start with Market, Not Technology

The most common mistake is starting with AI capabilities rather than market needs.

Leaders should insist on rigorous market fit validation before approving technical investments. This means demanding evidence of user pain points, documenting workflows, and quantifying the impact of current solutions before discussing AI approaches.

🤝 Design for Incentives from Day One

Incentive structures should shape product design from the beginning, not be treated as adoption "marketing" bolted on after development.

Leaders should ask: "Who needs to change behavior for this to succeed?" and "What motivates them?" before approving technical requirements.

📡 Build Feedback Loops Across All Three Dimensions

Create explicit mechanisms to detect emerging misalignment in any dimension as the product evolves.

Leaders should establish balanced metrics across all three dimensions rather than focusing exclusively on technical performance.
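One lightweight feedback mechanism is to track one metric per dimension at each review cycle. The check then alerts when a metric falls below an absolute floor or drops sharply from the previous cycle. The metric names, floors, and 0.10 drop tolerance below are hypothetical placeholders. Choose ones that fit your product.

```python
# Hypothetical metric and alert floor per dimension (names and values are assumptions).
FLOORS = {
    "market": ("share of target users ranking the problem top-3", 0.40),
    "solution": ("precision on a production sample", 0.80),
    "incentive": ("weekly adoption rate", 0.50),
}


def misalignment_alerts(history: list[dict[str, float]], max_drop: float = 0.10) -> list[str]:
    """Check the latest review cycle against each floor and against the previous cycle."""
    alerts = []
    latest = history[-1]
    previous = history[-2] if len(history) > 1 else None
    for dim, (metric, floor) in FLOORS.items():
        value = latest[dim]
        if value < floor:
            alerts.append(f"{dim}: {metric} = {value:.2f} is below floor {floor:.2f}")
        if previous is not None and previous[dim] - value > max_drop:
            alerts.append(f"{dim}: {metric} fell {previous[dim] - value:.2f} since last cycle")
    return alerts


# Two invented review cycles.
cycles = [
    {"market": 0.55, "solution": 0.86, "incentive": 0.62},
    {"market": 0.52, "solution": 0.88, "incentive": 0.45},
]
for alert in misalignment_alerts(cycles):
    print(alert)
```

In this run, both alerts come from the incentive dimension while technical precision actually improves. A dashboard that tracked only technical metrics would miss that pattern.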

👥 Embrace Hybrid Human-AI Approaches

The most successful AI products combine AI capabilities with human expertise rather than pursuing full automation.

Leaders should challenge teams to articulate how the solution enhances human capabilities rather than replaces them.
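A common way to build augmentation into the product is confidence-based routing. The model acts on its own only when it is confident and hands everything else to a person. This is a minimal sketch, not a prescribed design. The `Prediction` type, the 0.9 threshold, and the sample tickets are hypothetical. The sketch also assumes the model's confidence scores are reasonably calibrated.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model score in [0, 1]; assumed to be reasonably calibrated


def route(predictions: list[Prediction], auto_threshold: float = 0.9) -> dict[str, list[Prediction]]:
    """Auto-apply high-confidence predictions and send the rest to a human reviewer."""
    routed: dict[str, list[Prediction]] = {"auto": [], "human_review": []}
    for p in predictions:
        queue = "auto" if p.confidence >= auto_threshold else "human_review"
        routed[queue].append(p)
    return routed


batch = [
    Prediction("T-1", "password reset", 0.97),
    Prediction("T-2", "billing dispute", 0.71),
    Prediction("T-3", "order status", 0.93),
]
result = route(batch)
print([p.item_id for p in result["auto"]])          # ['T-1', 'T-3']
print([p.item_id for p in result["human_review"]])  # ['T-2']
```

Raising `auto_threshold` shifts more work toward human review. Lowering it increases automation but lets more errors through. The error impact analysis from Solution Fit is therefore a natural input for setting this threshold.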

🔄 Treat Implementation as Continuous Learning

Successful Triple Fit implementations treat the framework as an ongoing diagnostic tool rather than a one-time assessment.

Leaders should establish regular review cycles that reassess alignment across all three dimensions as users, markets, and technologies evolve.

Conclusion: Build Smart, Not Just Smart Tech

AI doesn’t fail because it’s not powerful—it fails because it’s not aligned.

The Triple Fit Framework helps you ask the right questions before you build, not after it’s too late.

Get the strategy right, and the tech will follow. Ignore it, and you’ll keep shipping solutions no one adopts.

Ultimately, organizations that master this strategic alignment gain three distinct competitive advantages:

Resource Efficiency: By identifying and addressing misalignments early, they avoid costly investments in technically impressive solutions that fail to deliver value.

Adoption Velocity: By designing for user and organizational incentives from the beginning, they accelerate time-to-value compared to competitors focused primarily on technical performance.

Sustainable Impact: By continuously monitoring alignment across all three dimensions, they create solutions that evolve with changing user needs, technical capabilities, and organizational priorities.

Curious about AI Agents, Automation and ML Selections?

Grab a coffee and dive into these 3 deep reads 👇

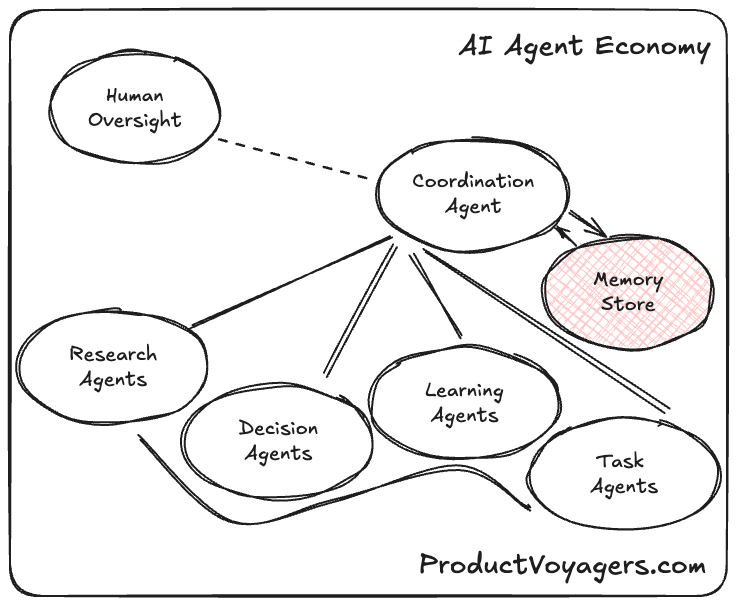

Product-Led AI Agent Economy

From AI Models to Product Value

This is a free edition of The Product Voyagers. To unlock full playbooks, tools, templates and expert takes — go Prime.

The Million-Dollar ML Decision Framework Every Product Manager Needs
